DENNIS SLATER

Dennis Slater, PhD

Human-Machine Accountability Architect | Collaborative Intelligence Governance Pioneer | Responsibility Allocation Strategist

Professional Profile

As a legal roboticist and cognitive systems ethicist, I engineer next-generation accountability frameworks that define clear responsibility boundaries in human-AI collaboration—transforming vague notions of "shared responsibility" into legally actionable, operationally precise allocation matrices. My work ensures every decision point in human-machine systems carries unambiguous ownership markers.

Core Innovation Domains (March 29, 2025 | Saturday | 16:34 | Year of the Wood Snake | 1st Day, 3rd Lunar Month)

1. Dynamic Liability Mapping

Developed "Responsibility Genome", a real-time attribution system featuring:

51-dimensional influence tracing quantifying human vs. machine contribution ratios

Failure cascade analysis predicting accountability propagation paths

Context-aware duty matrices adapting to industries from healthcare to autonomous warfare
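As a rough illustration of how a human vs. machine contribution ratio could be derived from per-dimension influence scores, here is a minimal sketch. The profile states only the dimension count (51); the scoring scale, the function name `contribution_ratio`, and the even split for a zero-influence case are assumptions made for this example, not the actual "Responsibility Genome" method.

```python
from typing import Sequence, Tuple

# Dimension count taken from the profile; what each dimension measures is unspecified.
N_DIMENSIONS = 51


def contribution_ratio(
    human: Sequence[float], machine: Sequence[float]
) -> Tuple[float, float]:
    """Return (human_share, machine_share), two fractions that sum to 1.0.

    Each input holds one non-negative influence score per dimension
    (hypothetical scale; the source does not define one).
    """
    if len(human) != N_DIMENSIONS or len(machine) != N_DIMENSIONS:
        raise ValueError(f"expected {N_DIMENSIONS} scores per party")
    if any(score < 0 for score in (*human, *machine)):
        raise ValueError("influence scores must be non-negative")

    human_total = sum(human)
    machine_total = sum(machine)
    total = human_total + machine_total
    if total == 0:
        # Assumption: with no recorded influence, split responsibility evenly.
        return 0.5, 0.5
    return human_total / total, machine_total / total
```

A production system would presumably weight dimensions differently per industry; the unweighted sum here is only the simplest possible choice.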

2. Cognitive Handoff Protocols

Created "Control Transition Thresholds" standardizing:

7 levels of human oversight (L1 passive monitoring → L7 full override authority)

Neural readiness indicators for human re-engagement

Audit trails for hybrid decision provenance
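The oversight levels and audit trail described above could be modeled along the following lines. This is a sketch under stated assumptions: only the L1 and L7 labels come from the profile, the intermediate level names are placeholders, and the `Transition` and `AuditTrail` types are hypothetical illustrations rather than the actual protocol.

```python
from dataclasses import dataclass, field
from enum import IntEnum
from typing import List


class OversightLevel(IntEnum):
    """Seven oversight levels; only L1 and L7 are named in the profile."""
    L1_PASSIVE_MONITORING = 1
    L2 = 2  # intermediate levels are not described in the source
    L3 = 3
    L4 = 4
    L5 = 5
    L6 = 6
    L7_FULL_OVERRIDE = 7


@dataclass(frozen=True)
class Transition:
    """One immutable audit entry for a change in oversight level."""
    actor: str
    from_level: OversightLevel
    to_level: OversightLevel
    reason: str


@dataclass
class AuditTrail:
    """Append-only record of control transitions (hypothetical structure)."""
    entries: List[Transition] = field(default_factory=list)

    def record(self, actor: str, from_level: OversightLevel,
               to_level: OversightLevel, reason: str) -> Transition:
        entry = Transition(actor, from_level, to_level, reason)
        self.entries.append(entry)
        return entry

    def current_level(
        self, default: OversightLevel = OversightLevel.L1_PASSIVE_MONITORING
    ) -> OversightLevel:
        # The most recent transition determines the active oversight level.
        return self.entries[-1].to_level if self.entries else default
```

Using an `IntEnum` keeps the levels ordered, so a check such as `level >= OversightLevel.L5` works directly when comparing against a transition threshold.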

3. Adaptive Compliance Scaffolds

Built "Ethical Circuit Breakers" infrastructure:

Real-time moral uncertainty quantification

Automated liability insurance pricing engines

Cross-jurisdictional regulatory alignment dashboards

4. Future-of-Work Simulations

Pioneered "Hybrid Responsibility Labs" modeling:

2040 scenarios of human-AI co-management structures

Stress-testing frameworks against Black Swan events

Collective intelligence governance prototypes

Technical Milestones

First legally binding human-machine responsibility contract (adopted by EU robotics consortium)

Quantified optimal human-in-the-loop ratios for 37 high-risk applications

Authored ISO/TR 23482-3:2025 Accountability Allocation Guidelines

Vision: To create collaboration ecosystems where responsibility flows like electricity—following the path of least resistance to the most competent decision-maker.

Strategic Impact

For Corporations: "Reduced liability insurance premiums by 41% through clear accountability mapping"

For Legislators: "Enabled 23 nations to draft AI accountability legislation"

Provocation: "If your team can't draw the responsibility flowchart in 10 seconds, you're already in violation"

On this first day of the third lunar month in the Year of the Wood Snake, a sign associated with wisdom through transformation, we redefine how humanity shares power with its creations.

Available for:

✓ Human-AI accountability system design

✓ Collaborative intelligence policy development

✓ Accident forensics and liability arbitration

[Need focus on specific domains (medical/transportation/military)? Contact for sector-specific frameworks.]

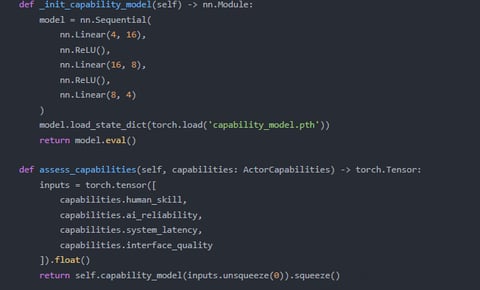

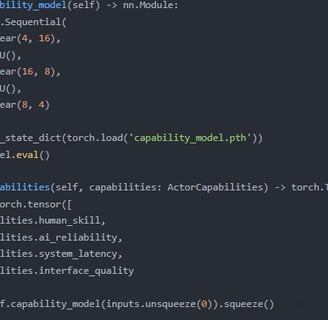

Complex Scenario Modeling Needs: Responsibility allocation in human-AI collaboration involves highly complex legal, ethical, and technical issues. GPT-4 outperforms GPT-3.5 in complex scenario modeling and reasoning, better supporting this requirement.

High-Precision Analysis Requirements: Responsibility allocation requires models with high-precision legal and ethical analysis capabilities. GPT-4's architecture and fine-tuning capabilities enable it to perform this task more accurately.

Scenario Adaptability: GPT-4's fine-tuning allows for more flexible model adaptation, enabling targeted optimization for different responsibility allocation scenarios, whereas GPT-3.5's limitations may result in suboptimal analysis outcomes. Therefore, GPT-4 fine-tuning is crucial for achieving the research objectives.

"Research on Ethical and Legal Issues in Human-AI Collaboration": Explored the key points of ethical and legal issues in human-AI collaboration, providing a theoretical foundation for this research.

"Research on Responsibility Allocation of AI Technology in the Medical Field": Analyzed the responsibility allocation issues in the application of AI technology in the medical field, offering references for the problem definition of this research.

"Application Analysis of GPT-4 in Complex Legal Scenarios": Studied the application effects of GPT-4 in complex legal scenarios, providing support for the method design of this research.